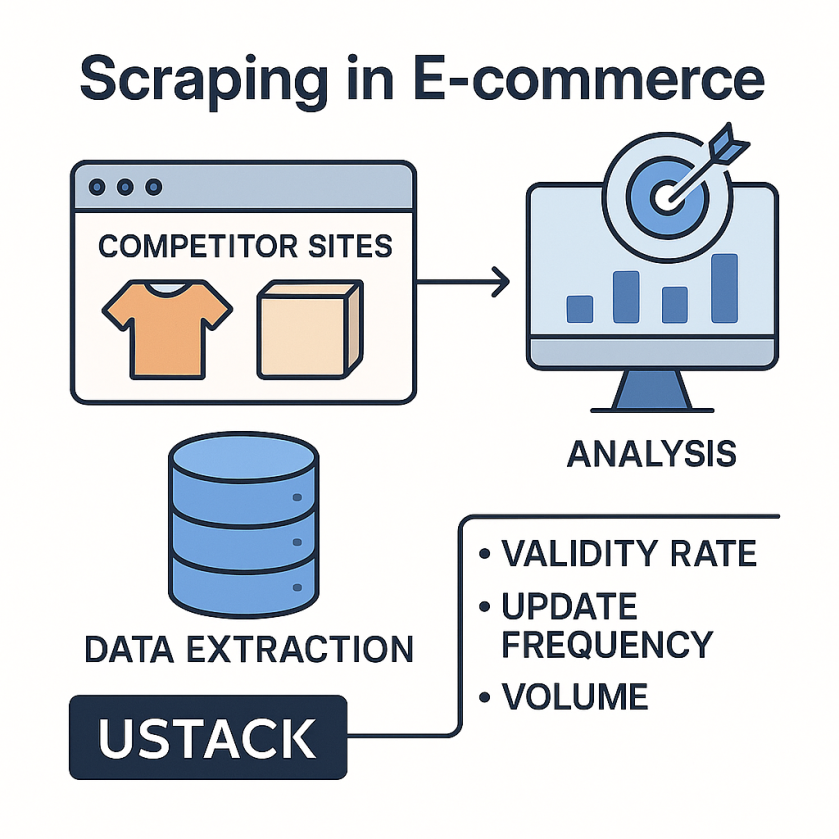

Scraping in eCommerce: How to Extract Data to Optimize Prices and Inventory

Learn how to apply web scraping in eCommerce to monitor prices and stock in real time and gain a data-driven competitive edge.

What is scraping in eCommerce?

Scraping is a technique that lets you automatically extract data from web pages. Instead of manually copying the information you see on‑screen, a script does it for you—quickly, in a structured format, and without human intervention.

In eCommerce this means access to prices, stock availability, product descriptions, customer reviews, and much more—both from your own site and from competitors’. You can continuously analyze the market, detect shifts, and tweak your strategy in real time.

The biggest advantage is autonomy: you don’t depend on anyone to share data or on official APIs (which often don’t exist or impose tight limits). With scraping, if the data appears in a page’s HTML, you can collect it.

Concrete benefits of scraping for your online store

Many online businesses operate without knowing how they compare to competitors. Others use scraping to keep constant visibility into competitors’ prices and stock. You don’t need massive infrastructure to get started; one of scraping’s virtues is that it can be adopted gradually.

With the right data you can:

- React faster to key price changes. If a competitor drops a price Monday at 10 a.m., you know that same day—not next weekend after losing sales.

- Spot stock‑outs on competitor sites and promote the same item while they’re empty‑handed.

- Identify behavior patterns—one retailer runs promos on Saturdays, another rotates featured products weekly. Scraping reveals those rhythms so you can anticipate them.

- Improve your site structure and SEO. Studying how top players present products helps refine your own product pages, categories, and copy.
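To make the first two points concrete, here is a minimal sketch of how two consecutive scrapes could be compared with pandas to flag price drops and stock-outs. It assumes each snapshot is a table with hypothetical columns `sku`, `price`, and `in_stock`; real data needs its own product-matching key.

```python
import pandas as pd


def compare_snapshots(previous: pd.DataFrame, current: pd.DataFrame) -> pd.DataFrame:
    """Join two scrape snapshots on a product key and flag price drops and stock-outs.

    Both frames are assumed to have the hypothetical columns
    'sku', 'price' and 'in_stock' (bool).
    """
    merged = previous.merge(current, on="sku", suffixes=("_prev", "_curr"))
    # A price drop: the current price is strictly lower than the previous one.
    merged["price_drop"] = merged["price_curr"] < merged["price_prev"]
    # A stock-out: the item was available before and is not available now.
    merged["went_out_of_stock"] = merged["in_stock_prev"] & ~merged["in_stock_curr"]
    return merged[["sku", "price_prev", "price_curr", "price_drop", "went_out_of_stock"]]


if __name__ == "__main__":
    earlier = pd.DataFrame(
        {"sku": ["A1", "B2", "C3"], "price": [199.0, 49.9, 15.0], "in_stock": [True, True, True]}
    )
    later = pd.DataFrame(
        {"sku": ["A1", "B2", "C3"], "price": [179.0, 49.9, 15.0], "in_stock": [True, True, False]}
    )
    print(compare_snapshots(earlier, later))
```

Because `merge` performs an inner join by default, products present in only one snapshot are dropped; tracking newly listed or delisted items would need an outer join plus handling for the resulting missing values.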

“The analytics revolution isn’t just about more data; it’s about better decisions.”

– Eric Schmidt, former Google CEO

Scraping doesn’t merely give you more data; it gives it to you sooner, so you can act smarter.

My experience using Python for scraping

When I started, the goal was clear: pull product info from multiple tech sites so a client could gain pricing insight. The project began with 3,000 products; today it tracks 5,000+.

The stack kept evolving, but the foundation was always Python:

- requests handled the HTTP calls.

- lxml parsed HTML quickly and accurately. Its speed and XPath support proved invaluable, especially on messy markup.
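A stripped-down version of that requests + lxml combination might look like the sketch below. The XPath expressions, the `product-title` and `price` class names, and the dollar price format are hypothetical placeholders; each real store needs its own selectors.

```python
import requests
from lxml import html

# Hypothetical XPath selectors: every real store needs its own.
NAME_XPATH = "//h1[@class='product-title']/text()"
PRICE_XPATH = "//span[@class='price']/text()"


def parse_product(page_source: str) -> dict:
    """Extract the product name and price from raw HTML using lxml and XPath."""
    tree = html.fromstring(page_source)
    names = tree.xpath(NAME_XPATH)
    prices = tree.xpath(PRICE_XPATH)
    return {
        "name": names[0].strip() if names else None,
        # Assumes a price formatted like "$1,299.00"; adjust for other locales.
        "price": float(prices[0].strip().lstrip("$").replace(",", "")) if prices else None,
    }


def scrape_product(url: str) -> dict:
    """Download one product page with requests and parse it."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # fail loudly on 4xx/5xx instead of parsing an error page
    return parse_product(response.text)
```

Keeping `parse_product` separate from the download step makes it easy to test the parsing against saved HTML files whenever a site changes its markup.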

The system runs five times a day, so if something changes at 9 a.m., the client knows by noon. I focus on harvesting and processing the data into Excel files; analysts then merge it with other sources, build charts, craft internal reports, and trigger business actions.
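The hand-off to analysts can be sketched with pandas: each run becomes a table stamped with its run time and written to its own Excel file. The column names and the file-naming scheme are assumptions for illustration, and `to_excel` needs the optional `openpyxl` package for `.xlsx` output.

```python
from datetime import datetime

import pandas as pd


def to_frame(rows: list[dict], scraped_at: datetime) -> pd.DataFrame:
    """Turn parsed product dicts into a tidy table stamped with the run time."""
    frame = pd.DataFrame(rows, columns=["sku", "name", "price"])
    frame["scraped_at"] = scraped_at  # every row of one run shares the same timestamp
    return frame


def export_run(rows: list[dict], scraped_at: datetime) -> str:
    """Write one run to its own Excel file and return the file name.

    Writing .xlsx requires the optional openpyxl package.
    """
    path = f"prices_{scraped_at:%Y%m%d_%H%M}.xlsx"  # hypothetical naming scheme
    to_frame(rows, scraped_at).to_excel(path, index=False)
    return path
```

One file per run keeps each snapshot immutable, so analysts can compare any two runs without the scraper overwriting history.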

The toughest challenge was anti‑bot defenses. Some sites blocked IPs or constantly reshuffled their structure. Solutions involved rotating proxies, third‑party CAPTCHA solvers, and making the code modular so it adapts fast when a site changes.
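Of those countermeasures, proxy rotation is the easiest to illustrate. The sketch below cycles through a pool of proxies with `itertools.cycle` and retries with a growing pause; the proxy URLs are hypothetical, and CAPTCHA solving, which depends on a third-party service, is left out.

```python
import itertools
import time

import requests

# Hypothetical proxy pool; in practice these come from a proxy provider.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]
_proxy_cycle = itertools.cycle(PROXIES)


def next_proxy() -> dict:
    """Return a requests-style proxies mapping for the next proxy in the pool."""
    proxy = next(_proxy_cycle)
    return {"http": proxy, "https": proxy}


def fetch_with_rotation(url: str, attempts: int = 3, backoff: float = 2.0) -> str:
    """Try a URL through successive proxies, waiting longer after each failure."""
    last_error = None
    for attempt in range(attempts):
        try:
            response = requests.get(url, proxies=next_proxy(), timeout=10)
            # raise_for_status() raises on any 4xx/5xx, including typical
            # block responses such as 403 Forbidden or 429 Too Many Requests.
            response.raise_for_status()
            return response.text
        except requests.RequestException as error:
            last_error = error
            if attempt < attempts - 1:
                time.sleep(backoff * (attempt + 1))  # linear backoff before the next proxy
    raise RuntimeError(f"All {attempts} attempts failed for {url}") from last_error
```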

It wasn’t just a technical project—it showed me how a well‑built scraper can integrate silently yet powerfully into a commercial decision flow. Sometimes a precise Excel sheet beats a fancy dashboard.

How scraping evolves depending on the site

Sites aren’t created equal—that’s a lesson learned over time. Some eCommerce stores have neat, predictable IDs and classes; others redesign frequently or use JavaScript for dynamic content.

- Simple sites: A basic Python + lxml scraper often suffices.

- Complex sites: You’ll need to render pages like a real browser. Tools such as Selenium or Playwright come into play.

- Adaptive sites: Some alter their HTML based on the browser or IP address. At that point, scraping becomes a strategic effort rather than a simple script.

Start simple and add complexity only when needed. Don’t solve problems you don’t have yet, but keep a plan B ready.
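One way to follow the "start simple" approach is to keep a small per-site configuration and escalate to a headless browser only where it is flagged. In this sketch, `SiteConfig` and its fields are hypothetical; the static path uses requests, and the rendered path uses Playwright’s synchronous API, imported lazily so it is only required for JavaScript-heavy sites. Per-site selectors can live in the same config, which is one way to keep the code modular when a site changes.

```python
from dataclasses import dataclass

import requests


@dataclass
class SiteConfig:
    """Per-site settings kept out of the scraping logic (hypothetical fields)."""
    name: str
    needs_js: bool = False  # True when prices are injected by JavaScript
    price_xpath: str = "//span[@class='price']/text()"  # selector used by the parser


def fetch_static(url: str) -> str:
    """Plain HTTP fetch: enough for server-rendered pages."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    return response.text


def fetch_rendered(url: str) -> str:
    """Render the page in headless Chromium and return the resulting HTML."""
    # Imported lazily so Playwright is only required for sites that need it.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        page = browser.new_page()
        page.goto(url)
        source = page.content()  # HTML after scripts have run
        browser.close()
    return source


def pick_fetcher(site: SiteConfig):
    """Start simple: only escalate to a real browser when the config says so."""
    return fetch_rendered if site.needs_js else fetch_static
```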

Legal aspects you should know

Scraping isn’t illegal per se, but it is subject to rules:

- Check the target site’s Terms & Conditions. Many explicitly forbid automated extraction.

- Respect robots.txt. It indicates which parts of a site are crawler-friendly. You could ignore it, but professionally and ethically you shouldn’t.

- Avoid personal data. Don’t store or process user names, emails, or IPs without consent.
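Python’s standard library can check robots.txt before you request a page. This is a minimal sketch using `urllib.robotparser`; the crawler name `PriceMonitorBot` is a hypothetical placeholder, and the check does not replace reading the Terms & Conditions.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "PriceMonitorBot"  # hypothetical crawler name


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed(parser: RobotFileParser, url: str) -> bool:
    """True if the rules permit USER_AGENT to fetch this URL."""
    return parser.can_fetch(USER_AGENT, url)
```

Against a live site you would instead create `RobotFileParser("https://shop.example.com/robots.txt")` and call `.read()` (the URL is a placeholder). `crawl_delay()` returns the site’s `Crawl-delay` value, if any, which is a natural input for request pacing.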

For a deeper dive, see the Electronic Frontier Foundation’s guide on developers’ rights and responsibilities around scraping.

Recommended tools and libraries

- requests – straightforward HTTP requests

- lxml – fast, precise HTML parsing

- pandas – structure data and export CSV/Excel

Start with those three; add selenium or playwright for dynamic content or stricter protections. The official lxml docs are an excellent starting point for mastering robust HTML manipulation.

Final tips and best practices

After years of scraping, the same lessons keep coming up:

- Start small—don’t try to scrape the whole internet on day one.

- Pick sites with stable structure for your first tests.

- Add logs so you know when and why something fails.

- Pace your requests—don’t fire off 1,000 per minute.

- Use headers like User‑Agent to mimic a real browser.

- Automate responsibly; never ship code you can’t maintain.
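Several of these tips (logging, pacing, and a browser-like User-Agent) fit in one small helper. The sketch below assumes a fixed 3-second pause and an example Chrome User-Agent string; both are illustrative values, not requirements of any particular site.

```python
import logging
import time

import requests

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("scraper")

# Example browser-like header; the exact string is an assumption.
HEADERS = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"
}


def polite_get_all(urls: list[str], delay_seconds: float = 3.0) -> dict[str, str]:
    """Fetch URLs one at a time with a fixed pause, logging every outcome."""
    pages = {}
    with requests.Session() as session:
        session.headers.update(HEADERS)
        for i, url in enumerate(urls):
            if i > 0:
                time.sleep(delay_seconds)  # 3 s between requests caps you at ~20 per minute
            try:
                response = session.get(url, timeout=10)
                response.raise_for_status()
                pages[url] = response.text
                log.info("OK %s (%d bytes)", url, len(response.content))
            except requests.RequestException as error:
                log.warning("FAILED %s: %s", url, error)
    return pages
```

Failures are logged and skipped rather than raised, so one broken page does not stop a whole run, and the log tells you afterwards which URLs need attention.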

“Talk is cheap. Show me the code.”

– Linus Torvalds

Even before code, the real key is knowing what data you need and why. Scraping is the means, not the end.

About the author

Miguel Fernandez is a developer specializing in Python, web scraping, and data automation for eCommerce. He integrates extraction solutions into existing stacks with a focus on system stability and scalability.