Web scraping has become essential for companies that need to collect and analyze large amounts of information quickly.

However, many run into challenges such as navigating complex websites, handling dynamic content, and managing the collected data efficiently.

In this article, we’ll dive into methods that leverage R’s capabilities for efficient data collection, from managing complex pages to automating workflows.

By the end, you will have practical ideas for improving your web scraping tasks and strengthening your data-driven decision-making.

Introduction to Advanced Web Scraping in R

Web scraping in R allows data analysts and developers to gather information from websites using powerful packages tailored for data extraction.

When moving beyond basic scraping, advanced techniques become essential to handle dynamic content, session management, and data parsing efficiently.

Advanced scraping in R unlocks a vast range of possibilities for market research, sentiment analysis, and competitive intelligence.

Key Techniques for Advanced Web Scraping in R

Using Specialized Packages:

- rvest for static content scraping.

- httr for handling HTTP requests and API interactions.

- RSelenium for dynamic JavaScript-based websites.
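As a minimal sketch of the rvest workflow for static content, the example below parses an inline HTML snippet (a hypothetical product listing standing in for a real page) and extracts each product's name and price. Against a live site you would replace `minimal_html()` with `read_html(url)`; the CSS selectors `.product`, `h2` and `.price` are assumptions about the page's markup.

```r
library(rvest)

# Inline HTML stands in for a downloaded page (hypothetical markup).
page <- minimal_html('
  <div class="product"><h2>Widget A</h2><span class="price">$19.99</span></div>
  <div class="product"><h2>Widget B</h2><span class="price">$24.50</span></div>
')

# Select each product card, then pull the name and price from inside it,
# so every row stays aligned even if a card is missing a field.
products      <- html_elements(page, ".product")
product_names <- html_text2(html_element(products, "h2"))
prices        <- html_text2(html_element(products, ".price"))

scraped <- data.frame(name = product_names, price = prices)
print(scraped)
```

Selecting the cards first and then the fields inside each card (rather than selecting all `h2` and all `.price` elements separately) keeps names and prices paired correctly.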

Session and Authentication Handling:

- Maintain session persistence for websites requiring login.

- Manage cookies so the session persists across requests instead of being reset.
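A minimal sketch of session persistence with rvest, assuming a site with a standard HTML login form: `session()` keeps cookies between requests, so after logging in once you can navigate to protected pages with the same object. The helper name `login_session()` and the form field names `username` and `password` are hypothetical; inspect the actual login form (for example with `html_form()`) before relying on them.

```r
library(rvest)

# Hypothetical helper: log in once and return the session, which carries
# the cookies set by the server into every later request.
# ASSUMPTION: the login form is the first form on the page and uses
# fields named "username" and "password".
login_session <- function(login_url, user, pass) {
  s    <- session(login_url)
  form <- html_form(s)[[1]]
  form <- html_form_set(form, username = user, password = pass)
  session_submit(s, form)   # returns the session after the form is submitted
}

# Usage (not run): reuse the logged-in session for protected pages.
# s <- login_session("https://example.com/login", "me", "secret")
# s <- session_jump_to(s, "https://example.com/account/orders")
# orders <- html_table(html_element(s, "table"))
```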

Data Parsing and Transformation:

- Employ xml2 for structured XML and HTML content.

- Leverage the tidyverse for data wrangling and analysis.
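To illustrate xml2 parsing combined with tidyverse wrangling, here is a small sketch over an inline XML document (hypothetical catalog data). XPath expressions select each `<item>`, the fields are collected into a tibble, and dplyr filters and sorts the result.

```r
library(xml2)
library(dplyr)

# Inline XML standing in for a downloaded feed (hypothetical data).
doc <- read_xml('<catalog>
  <item id="a1"><name>Widget A</name><price currency="USD">19.99</price></item>
  <item id="b2"><name>Widget B</name><price currency="USD">24.50</price></item>
  <item id="c3"><name>Widget C</name><price currency="EUR">17.00</price></item>
</catalog>')

items <- xml_find_all(doc, "//item")

# One row per <item>; xml_find_first() keeps the result aligned with items.
catalog <- tibble(
  id       = xml_attr(items, "id"),
  name     = xml_text(xml_find_first(items, "./name")),
  price    = as.numeric(xml_text(xml_find_first(items, "./price"))),
  currency = xml_attr(xml_find_first(items, "./price"), "currency")
)

# tidyverse-style wrangling: keep USD items, most expensive first.
usd <- catalog %>%
  filter(currency == "USD") %>%
  arrange(desc(price))
```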

Essential R Packages for Advanced Web Scraping

When engaging in advanced web scraping with R, leveraging the right tools can make the process efficient and robust. Here are some essential R packages that elevate data extraction capabilities:

Core Packages for Web Scraping

- rvest: Simplifies scraping of static HTML content with functions to extract tables, nodes, and text easily.

- httr: Provides tools for managing HTTP requests and working with APIs, supporting GET, POST, and authentication.

- RSelenium: Controls web browsers for dynamic content scraping, handling JavaScript-heavy pages and form submissions.

- xml2: Efficiently parses and navigates XML and HTML documents for structured data extraction.
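For pages fetched with httr, a common pattern is to identify your client, retry transient failures, and stop on HTTP errors before parsing. The sketch below wraps that in a hypothetical `fetch_html()` helper; the user-agent string and the three attempts are illustrative choices, not requirements of any particular site.

```r
library(httr)
library(rvest)

# Hypothetical helper: GET a page with an identifying user agent,
# retry failed requests with exponential backoff, then parse the HTML.
fetch_html <- function(url, agent = "my-research-bot/0.1 (contact@example.com)") {
  resp <- RETRY("GET", url,
                user_agent(agent),
                timeout(15),      # give up on a single attempt after 15 seconds
                times = 3)        # at most 3 attempts in total
  stop_for_status(resp)           # turn a final 4xx/5xx response into an R error
  read_html(content(resp, as = "text", encoding = "UTF-8"))
}

# Usage (not run):
# page  <- fetch_html("https://example.com/products")
# title <- html_text2(html_element(page, "title"))
```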

Recommended Reading: What Makes a Web Scraping Framework the Best?

Supporting Packages for Data Processing

- tidyverse: Offers a comprehensive suite for data manipulation and visualization, ideal for cleaning and analyzing scraped data.

- stringr: Facilitates string manipulation for cleaning and structuring extracted text.

- jsonlite: Simplifies working with JSON data from APIs or embedded web content.
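A short sketch of the supporting packages at work: jsonlite turns a JSON array (hypothetical data, as an API might return) into a data frame, and stringr cleans the messy text and price fields so they are ready for analysis.

```r
library(jsonlite)
library(stringr)

# JSON of the kind an API or embedded script tag might return (hypothetical data).
raw_json <- '[
  {"title": "  Widget A ", "price": "$1,299.00"},
  {"title": "Widget   B",  "price": "$24.50"}
]'

# An array of JSON objects is simplified into a data frame.
products <- fromJSON(raw_json)

# Trim and collapse repeated whitespace in titles.
products$title <- str_squish(products$title)

# Strip currency symbols and thousands separators, then convert to numbers.
products$price <- as.numeric(str_remove_all(products$price, "[$,]"))
```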

Additional Tools for Advanced Needs

- robotstxt: Checks a website’s robots.txt file so you can confirm which paths crawlers are allowed to access before scraping.

- curl: Handles low-level HTTP requests for more control over the data fetching process.
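robotstxt and curl can be combined into a polite fetch step. In this sketch, the hypothetical `polite_fetch()` helper checks the URL against the site's robots.txt with `paths_allowed()`, makes the request through curl's lower-level `curl_fetch_memory()`, and pauses after each call. The bot name, user agent and two-second delay are assumptions to adjust for your own use case.

```r
library(robotstxt)
library(curl)

# Hypothetical helper: fetch a URL only if robots.txt permits it,
# then wait before returning so repeated calls are rate-limited.
polite_fetch <- function(url, bot = "*", delay = 2) {
  # With a full URL, paths_allowed() infers the domain automatically.
  if (!paths_allowed(url, bot = bot)) {
    stop("robots.txt disallows fetching: ", url)
  }
  h   <- new_handle(useragent = "my-research-bot/0.1")
  res <- curl_fetch_memory(url, handle = h)   # raw libcurl request, body in memory
  Sys.sleep(delay)                            # simple fixed delay between requests
  list(status = res$status_code,
       body   = rawToChar(res$content))
}

# Usage (not run):
# res <- polite_fetch("https://example.com/products")
# res$status
```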

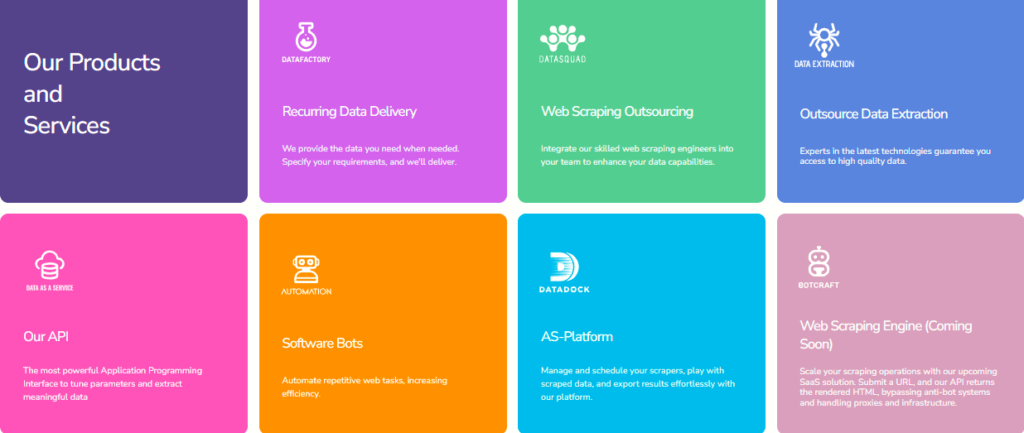

Automating Competitor Analysis with BotCraft and R

In today’s competitive landscape, staying ahead means continuously monitoring what your competitors are doing. By automating competitor analysis using tools like BotCraft and R, businesses can gain real-time insights into market trends, pricing strategies, and product offerings.

| Step | Description | Tools Involved |
| --- | --- | --- |
| Setting Up BotCraft | Configure BotCraft for automated web scraping tasks to gather competitor data (prices, availability, reviews). | BotCraft |
| Leveraging R for Data Processing | Import scraped data into R for cleaning, transformation, and analysis using packages like dplyr and tidyverse. | R, dplyr, tidyverse |
| Real-Time Monitoring & Reporting | Schedule and automate real-time data extraction with BotCraft, then generate reports or dashboards in R to track competitor strategies. | BotCraft, R |
| Visualizing Insights | Use ggplot2 in R to visualize competitor data (price trends, customer sentiment) for better decision-making. | R, ggplot2 |
| Benefits | Improve efficiency, accuracy, and scalability by automating competitor analysis, providing quick, data-driven insights. | BotCraft, R |
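The R side of this workflow (the processing and visualization steps in the table) can be sketched with dplyr and ggplot2. BotCraft's export format is not described here, so the example assumes a hypothetical table with one row per date, competitor and product; in practice you might load it with `read.csv()`.

```r
library(dplyr)
library(ggplot2)

# Hypothetical scraped prices: one row per date, competitor and product.
prices <- data.frame(
  date       = as.Date(c("2024-05-01", "2024-05-02", "2024-05-01", "2024-05-02")),
  competitor = c("Shop A", "Shop A", "Shop B", "Shop B"),
  product    = "Widget",
  price      = c(20.00, 18.50, 21.00, 21.00)
)

# Average price per competitor and how much it moved over the period.
summary_tbl <- prices %>%
  arrange(date) %>%
  group_by(competitor) %>%
  summarise(
    mean_price = mean(price),
    change     = last(price) - first(price),
    .groups    = "drop"
  )

# Price trend per competitor, ready for a report or dashboard.
trend_plot <- ggplot(prices, aes(date, price, colour = competitor)) +
  geom_line() +
  labs(title = "Competitor price trend", x = NULL, y = "Price")
```

Sorting by date before `group_by()` matters here: `first()` and `last()` use row order, so the change column is only meaningful once rows are in chronological order.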

Recommended Reading: Advantages of Web Scraping: Maximizing Efficiency in Data Extraction

Leveraging DataDock for Secure Trend Data Storage and Access

In today’s fast-paced business environment, ensuring the security and accessibility of trend data is crucial for informed decision-making.

DataDock provides a comprehensive solution for securely storing and managing data while maintaining seamless access for authorized users.

Here’s how it can be leveraged:

- Centralized Data Storage: Store all your trend data in a secure, cloud-based environment, making it easily accessible across teams while ensuring its safety.

- Real-Time Data Syncing: Keep trend data up-to-date across all your systems, allowing teams to access the most current insights and make real-time decisions.

- Seamless Data Integration: DataDock can integrate with various business tools and platforms, providing a holistic view of the data you need for analysis.

- Scalable and Flexible: Whether you’re working with large volumes of data or expanding your operations, DataDock scales with your needs, ensuring you never run out of storage or functionality.

In addition, our software features:

- Autonomy and flexibility in operations: You will be able to run and adjust crawlers on demand, quickly adapting to changes in your information needs.

- Infrastructure and maintenance management: Save on costs associated with technology management and concentrate on using the data.

If you want to improve your company’s data solution, do not hesitate to contact us.

Recommended Reading: Cost of Web Scraping

Companies Providing Advanced Web Scraping Solutions

When looking for advanced web scraping solutions, AutoScraping stands out as a top choice for businesses that want to automate data collection efficiently and securely. It offers a range of benefits tailored to your needs:

- Customizable Scraping: Tailor scraping methods to suit the specific data your business needs, from market trends to competitor analysis.

- Automation: Automate the process of gathering large volumes of data, freeing up valuable time for your team to focus on decision-making and analysis.

- Real-time Data Access: Get up-to-date insights that empower your company to stay ahead in competitive industries.

- User-Friendly Interface: No technical expertise required, making it accessible for businesses of all sizes.

- Secure and Compliant: AutoScraping ensures your data collection processes are ethical and legally compliant, respecting website terms and regulations.

By choosing AutoScraping, your company can leverage cutting-edge web scraping tools with ease, enhancing productivity and driving informed decisions.

FAQs: Advanced Web Scraping in R

Is web scraping possible in R?

Yes, web scraping is possible in R using packages like rvest and httr, which allow you to extract data from websites efficiently.

What is the best R package for web scraping?

For most static pages, rvest is the usual first choice, as it simplifies web scraping with functions designed for parsing HTML and extracting content. For JavaScript-heavy pages it is typically paired with RSelenium, and for APIs with httr.

Is web scraping better in R or Python?

It depends on your needs. R is convenient when the scraped data feeds directly into analysis and visualization, while Python is more versatile and has a broader range of scraping libraries, which often makes it the better fit for large or complex scraping projects.

How to extract a table from a website using R?

Use rvest: read the page with read_html(), select the table with html_element(), and convert it to a data frame with html_table().
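A minimal sketch, using an inline HTML table in place of a downloaded page (swap `minimal_html()` for `read_html(url)` on a real site). The `#rates` selector and the table contents are hypothetical.

```r
library(rvest)

# Inline HTML standing in for a page that contains a table (hypothetical data).
page <- minimal_html('
  <table id="rates">
    <tr><th>Plan</th><th>Monthly price</th></tr>
    <tr><td>Basic</td><td>10</td></tr>
    <tr><td>Pro</td><td>25</td></tr>
  </table>')

# Select the table by CSS selector; html_table() uses the <th> row as the
# header and converts numeric-looking columns to numbers.
rates <- html_table(html_element(page, "#rates"))
print(rates)
```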